The Project

Project Objective

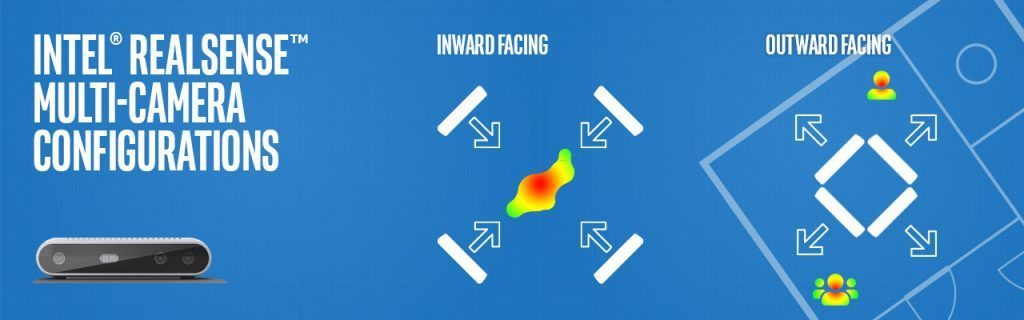

The objective of this work is to design and build a system that enables safe autonomous flight of a drone and stores flight data for later use. The drone will use LiDAR sensors and Intel RealSense cameras to sense its surroundings, and PX4 Autopilot together with ROS (Robot Operating System) to let all components communicate. In the general solution, an Intel NUC runs ROS. ROS communicates with the drone, which runs the PX4 Autopilot software, and provides it with mapping and waypoints. The drone is connected to the Intel RealSense camera and the LiDAR sensors, and these sensors, combined with the mapping and waypoints provided from ROS, keep the drone safe in flight. Once all of these components work together, the drone will be able to traverse a forest.
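One practical detail in the ROS-to-PX4 link is the coordinate frame. ROS conventionally expresses positions in east-north-up (ENU) axes, while PX4 uses north-east-down (NED). A bridge such as MAVROS normally performs this conversion, so the sketch below is only an illustration of the axis swap, assuming waypoints arrive as simple local positions in metres. The `Waypoint` type and the function names are hypothetical and are not part of the CS team's software.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Waypoint:
    """A local position in metres; the axis meaning depends on the frame."""
    x: float
    y: float
    z: float


def enu_to_ned(wp: Waypoint) -> Waypoint:
    """Convert ENU (x=east, y=north, z=up) to NED (x=north, y=east, z=down)."""
    return Waypoint(x=wp.y, y=wp.x, z=-wp.z)


def convert_route(route: List[Waypoint]) -> List[Waypoint]:
    """Convert every waypoint of a route from ENU to NED, keeping the order."""
    return [enu_to_ned(wp) for wp in route]
```

Applying the same swap twice returns the original point, so the function can also be used to convert NED positions back to ENU.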

Project Sponsors

Client One: Dr. Truong Nghiem: Truong X. Nghiem is an Assistant Professor at the School of Informatics, Computing, and Cyber Systems at Northern Arizona University (NAU), USA. His research lies in the confluence of control, optimization, machine learning, and computation to address fundamental Cyber-Physical System (CPS) challenges across various domains.

Client Two: Dr. Alexander Shenkin: Alexander Shenkin is a plant and forest ecosystem ecologist in NAU's SICCS with interest and experience in merging social, anthropological, and political perspectives of ecosystems and conservation. He employs novel field techniques coupled with modern statistical methods to address long-standing questions about nature at a range of scales, and his experience in electrical and software engineering enables him to push the frontier where technology meets ecology.

Project Requirements

Requirement One: The LiDAR sensors, Intel RealSense camera, and Intel NUC must fit on the drone and run from the drone's power supply without issues.

Requirement Two: The LiDAR sensor and Intel RealSense camera must be integrated with the Intel NUC, and all three must work with the software provided to our team by the CS team.

Requirement Three: The Intel NUC must be able to use the waypoints provided by the CS team and communicate with PX4 Autopilot.

Requirement Four: An off-the-shelf navigation system must be able to move through the 3D map that the CS team's software creates.

Requirement Five: A second data stream must store all of the data produced by the drone's sensors. This data will be used after the flight to build 3D models.

Requirement Six: A small remote display screen must show the workings of the drone's navigation system in real time.

Requirement Seven: Small RGB sensors and photogrammetry routines must be implemented, utilizing ROS, to add to the data that the LiDAR and Intel RealSense produce.

Requirement Eight: The drone must explore a given 3D space as efficiently as possible, so that it samples an entire region of a forest while avoiding all collisions.
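To make Requirement Eight more concrete, the sketch below generates a simple back-and-forth ("lawnmower") sweep over a rectangular region at a fixed altitude. This is only a baseline illustration, not an optimal exploration strategy and not the method selected for this project, and it does not perform any collision avoidance. The function name, the rectangular region, and the fixed altitude are all assumptions made for the example.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def lawnmower_waypoints(x_min: float, x_max: float,
                        y_min: float, y_max: float,
                        spacing: float, altitude: float) -> List[Point]:
    """Return sweep waypoints covering a rectangle with rows `spacing` apart."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    if x_max < x_min or y_max < y_min:
        raise ValueError("region bounds are inverted")

    waypoints: List[Point] = []
    row = 0
    y = y_min
    # Small tolerance so the last row is kept despite floating-point rounding.
    while y <= y_max + 1e-9:
        # Alternate the sweep direction on every row to avoid dead transits.
        if row % 2 == 0:
            start_x, end_x = x_min, x_max
        else:
            start_x, end_x = x_max, x_min
        waypoints.append((start_x, y, altitude))
        waypoints.append((end_x, y, altitude))
        row += 1
        y = y_min + row * spacing
    return waypoints
```

For example, `lawnmower_waypoints(0.0, 10.0, 0.0, 4.0, 2.0, 5.0)` produces three rows (at y = 0, 2, and 4) flown in alternating directions. In a forest, each leg would still need to be checked against the 3D map and replanned around obstacles.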

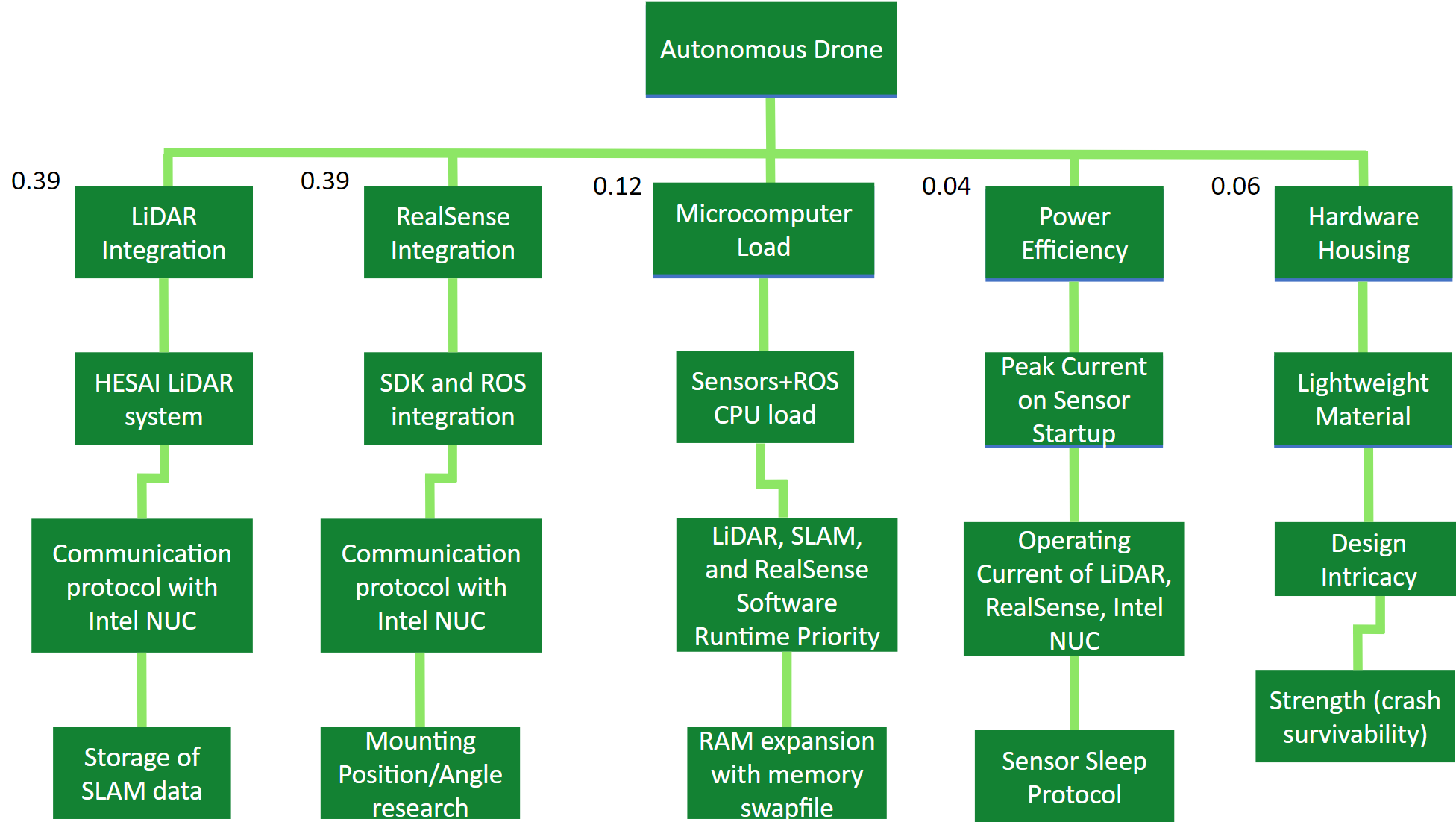

Objective Tree

Figure 3: Project Objective Tree

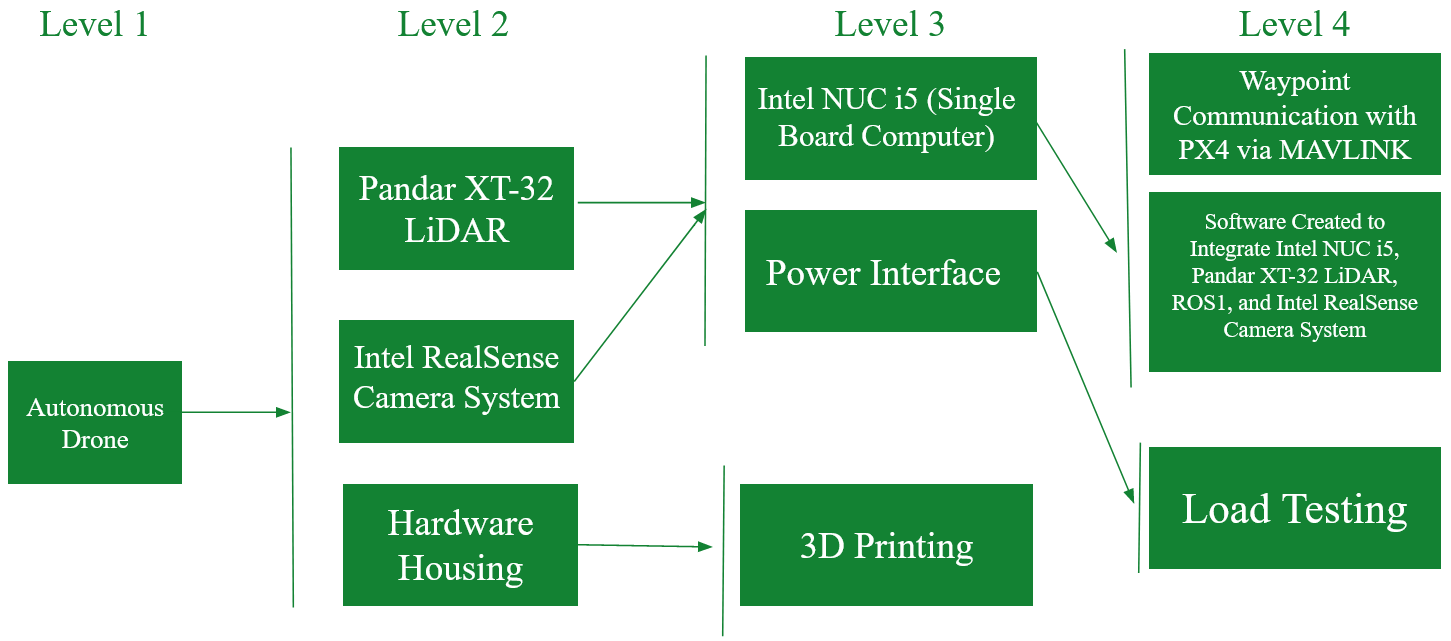

Work-Breakdown Structure (WBS)

Figure 4: Project WBS

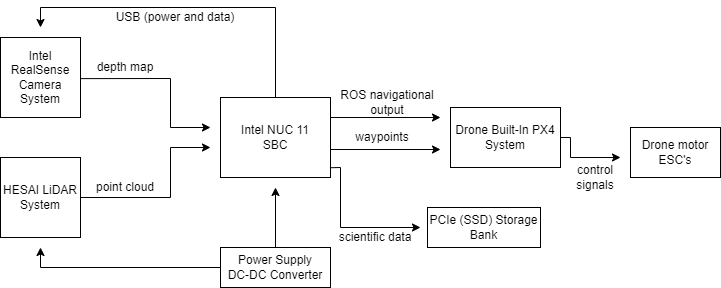

Project Design/Plan

Figure 5: Project Design/Plan

Project Components

Figure 6: Intel NUC i5

Figure 7: PX4 Autopilot Logo [3]

Figure 8: ROS (Robot Operating System) Logo [2]

Figure 9: Pandar XT-32 LiDAR

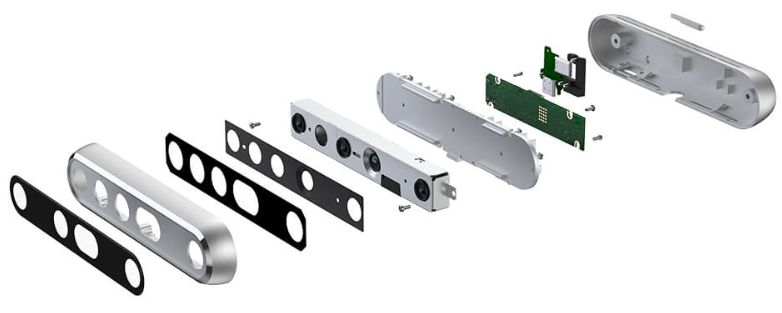

Figure 10: Intel RealSense Camera [4]