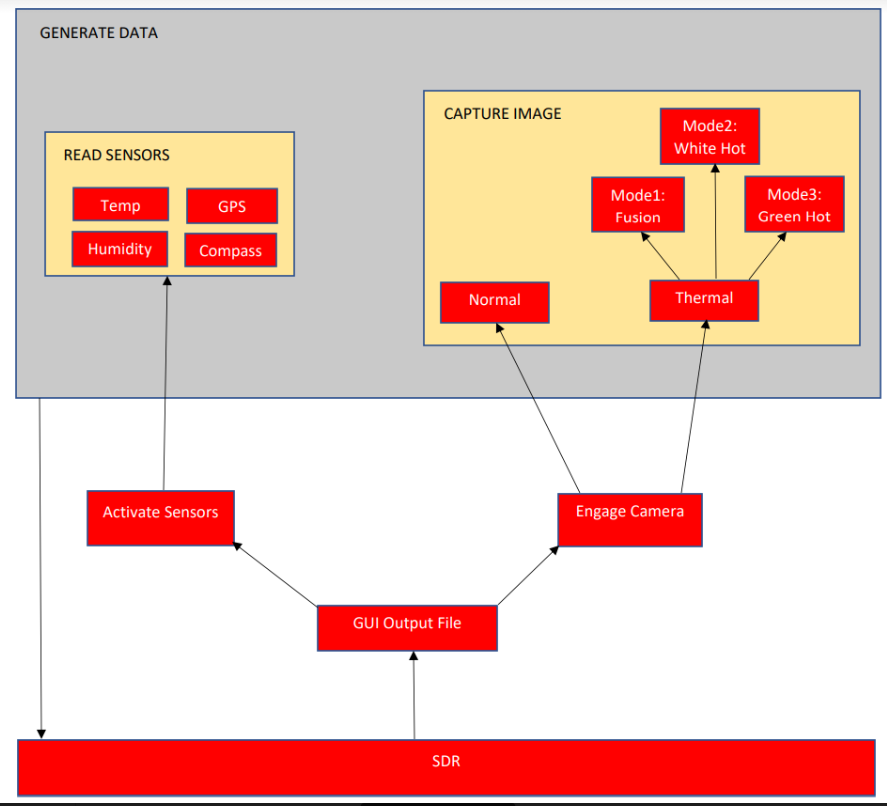

Current Project Design Progress

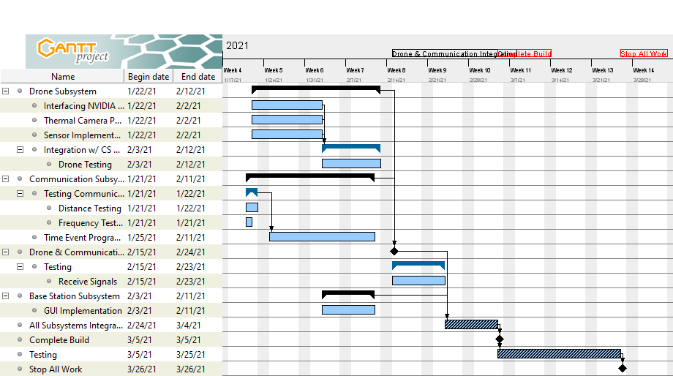

By the end of the project, our design team had completed every task outlined on the Gantt chart except the implementation of the GPS sensor. We experienced difficulties interfacing the sensor with the UART serial communication of the Nvidia Jetson Nano. Throughout the project, we gained experience working with the Nvidia Jetson Nano microcomputer, its GPIO pins, and its CSI port. We were able to work with the high-definition and thermal cameras, sensors, and software-defined radio. We gained skills programming in Python, learned to work both within our own team and as part of an interdisciplinary team, and improved our critical thinking and problem solving.