Envisioned Solution

System Overview

The SSDynamics testing framework is designed to test SSDs through randomly generated test cases derived from TLA+ formal specification files.

The architecture supports test case generation, execution, and logging for SSDs. Its main components are:

- TLA+ File Processing: The framework will load TLA+ specification files containing multiple test scenarios. These files define the expected behavior of the SSD and how it should respond to different commands.

- Random Test Generation: Once a TLA+ file is loaded, the system will randomly select tests from the scenarios it defines. Random selection diversifies the tests so that, over many runs, they exercise a wide range of behaviors and edge cases.

- PlusPy Integration: The system will integrate PlusPy, a TLA+ interpreter written in Python, so that TLA+ specifications can be executed from Python. This lets test cases derived from the specification be converted directly into commands that can be executed on the SSD.

- Command Execution: The system will generate NVMe commands (via `nvme-cli`) and execute them against the SSD. These commands exercise various operations and stress the SSD in different ways.

- Error Logging and Traceability: The system will log every action performed during a test, so that each step, and any error it triggers, can be traced. These logs support debugging and help in optimizing SSD behavior.
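The random test generation and per-step logging described above can be sketched as a seeded random walk over the specification's state graph. This is a minimal illustration under stated assumptions: the spec is assumed to have already been reduced (for example, by PlusPy) to a hypothetical `TRANSITIONS` table mapping each abstract state to its enabled actions and successor states, and the state and action names are illustrative, not taken from the actual SSDynamics spec. Recording the seed makes any failing sequence replayable.

```python
import json
import logging
import random

# Hypothetical toy model: for each abstract SSD state, the actions the
# specification enables and the state each action leads to. In the real
# framework this information would come from the TLA+ spec (e.g. via PlusPy).
TRANSITIONS = {
    "Empty":   {"Write": "Written", "Read": "Empty"},
    "Written": {"Read": "Written", "Write": "Written", "Flush": "Flushed"},
    "Flushed": {"Read": "Flushed", "Write": "Written"},
}

log = logging.getLogger("ssdynamics")


def random_walk(start, steps, seed):
    """Generate one random test case as a list of (step, state, action, next_state).

    The seed is logged with every step so a failing sequence can be replayed exactly.
    """
    rng = random.Random(seed)  # private RNG: reproducible, independent of global state
    state = start
    trace = []
    for step in range(steps):
        enabled = sorted(TRANSITIONS[state])  # sort for a deterministic choice order
        action = rng.choice(enabled)
        next_state = TRANSITIONS[state][action]
        trace.append((step, state, action, next_state))
        # One structured (JSON) log line per step gives traceability for debugging.
        log.info(json.dumps({"seed": seed, "step": step, "state": state,
                             "action": action, "next": next_state}))
        state = next_state
    return trace


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    for step, state, action, nxt in random_walk("Empty", steps=5, seed=42):
        print(step, state, "--", action, "->", nxt)
```

Each generated step only uses an action the model enables in the current state, so every test case is, by construction, a behavior the specification allows.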

Technical Details

The system will leverage various tools and technologies to execute tests and handle results effectively:

- TLA+ Formal Specification: We use TLA+ to describe the behavior of the SSD mathematically. Because the test cases are derived from this model, they are grounded in a precise, unambiguous definition of expected behavior.

- PlusPy: This Python implementation of TLA+ lets us execute TLA+ specifications and simulate the behavior of the systems they describe. We will modify PlusPy for SSD testing so that it generates accurate test sequences from the TLA+ model.

- Python for Automation: Python scripts will automate test case generation, NVMe command execution, and result logging. Python's ease of scripting enables quick iterations and flexible test setups.

- nvme-cli for SSD Interaction: `nvme-cli` is a command-line tool for sending NVMe commands to SSDs. It is central to test execution: we will use it to issue I/O operations such as reads and writes against the SSDs, and to drive error scenarios.
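The following sketch shows one way the Python automation layer might turn an abstract spec action into an `nvme-cli` invocation. The `Action` class, `to_nvme_argv`, `execute`, the 512-byte block size, and the device path are hypothetical; the `nvme read`, `nvme write`, and `nvme flush` subcommands and their `--start-block`, `--block-count`, `--data-size`, and `--data` options are part of nvme-cli, but their exact behavior should be confirmed against the installed version's man pages. Dry run is the default so the mapping can be checked without touching a drive.

```python
import subprocess
from dataclasses import dataclass

BLOCK_SIZE = 512  # assumption: 512-byte LBA format; query the namespace in practice


@dataclass
class Action:
    """Hypothetical abstract action, as it might be produced from a spec step."""
    name: str          # "Read", "Write" or "Flush"
    lba: int = 0       # starting logical block address
    blocks: int = 1    # number of blocks to transfer (ordinary 1-based count)


def to_nvme_argv(action, device, data_file):
    """Translate an abstract action into an nvme-cli argument vector."""
    if action.name == "Flush":
        return ["nvme", "flush", device]
    if action.name in ("Read", "Write"):
        if action.blocks < 1:
            raise ValueError("blocks must be >= 1")
        return [
            "nvme", action.name.lower(), device,
            f"--start-block={action.lba}",
            # --block-count maps to the NVMe NLB field, which is zero-based, hence -1
            # (assumption to verify against your nvme-cli version).
            f"--block-count={action.blocks - 1}",
            f"--data-size={action.blocks * BLOCK_SIZE}",
            f"--data={data_file}",
        ]
    raise ValueError(f"unsupported action: {action.name}")


def execute(argv, dry_run=True):
    """Run the command (or only print it in dry-run mode) and return its exit status."""
    if dry_run:
        print("DRY RUN:", " ".join(argv))
        return 0
    result = subprocess.run(argv, capture_output=True, text=True)
    # A non-zero exit status is the first, immediate signal of a command or device error.
    if result.returncode != 0:
        print("ERROR:", result.stderr.strip())
    return result.returncode
```

For example, `execute(to_nvme_argv(Action("Write", lba=8, blocks=2), "/dev/nvme0n1", "pattern.bin"))` prints the command it would run. Building an argument list rather than a shell string avoids quoting problems and keeps each executed command easy to log verbatim. Pass `dry_run=False` only against a dedicated test drive, since writes overwrite data.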

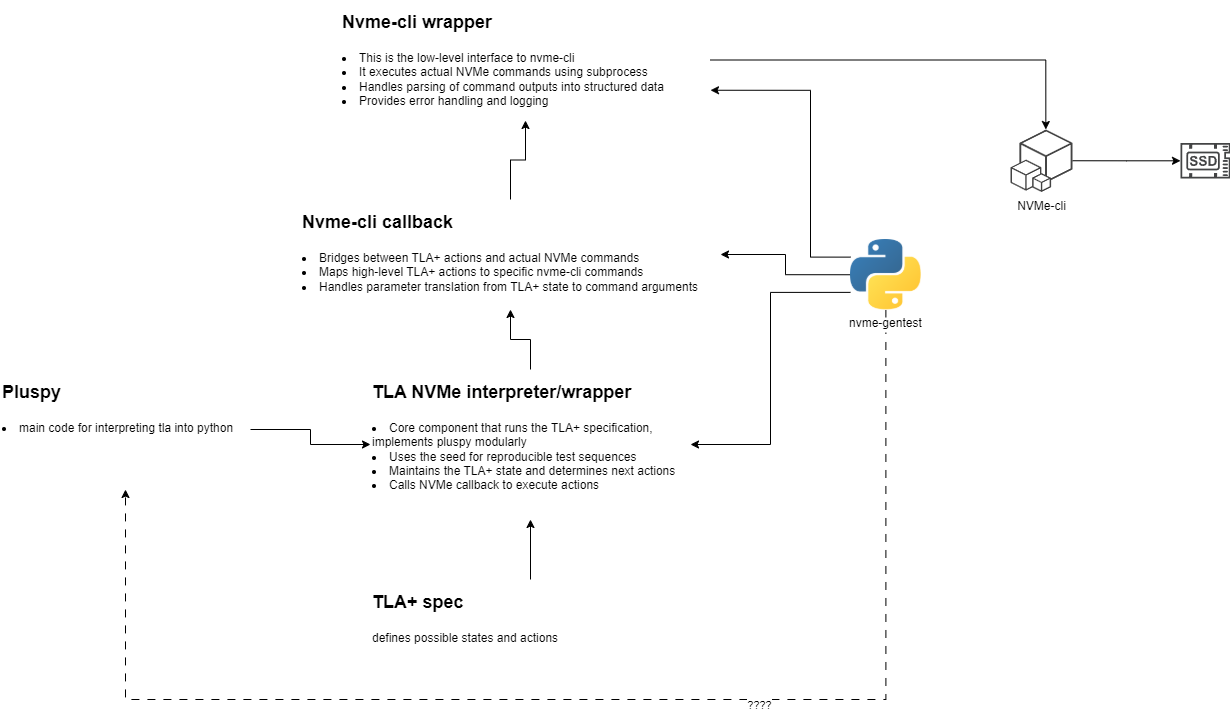

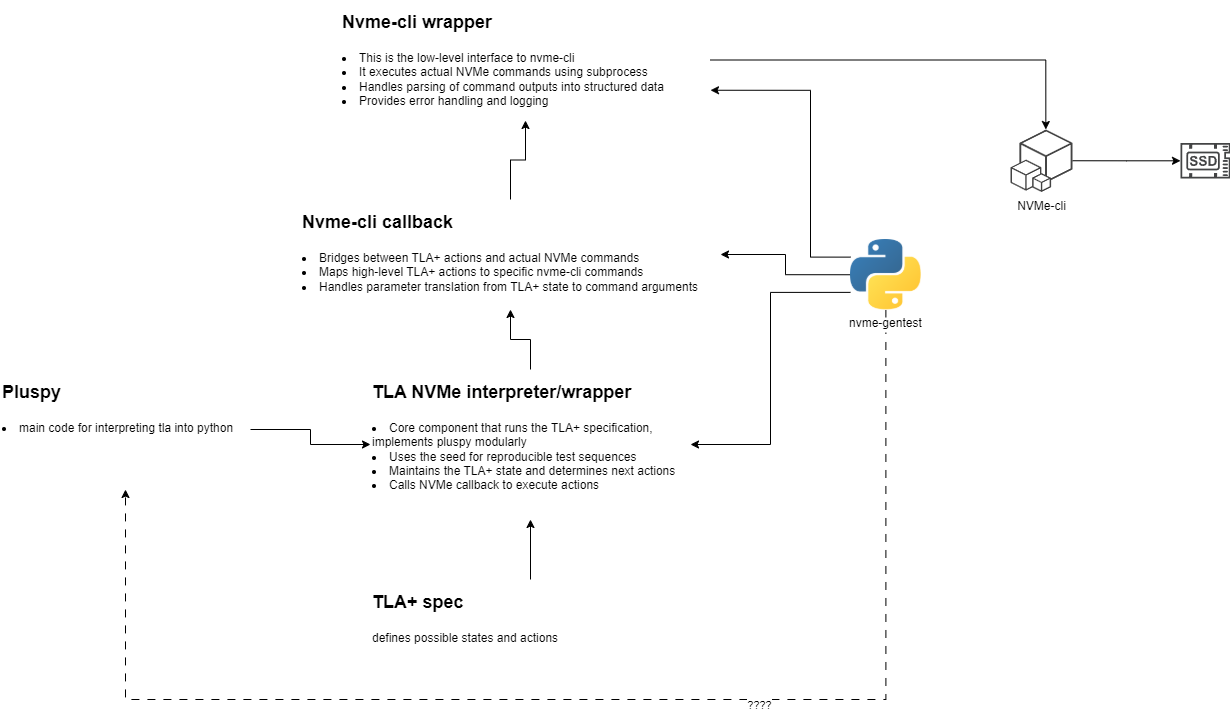

Architecture Diagram

Below is an overview of the system architecture, illustrating the flow of test execution and result logging:

Challenges and Considerations

While the solution is promising, several challenges need to be addressed:

- Test Coverage: Random selection alone cannot guarantee that every edge case is exercised, so we must check that our random generation does not miss critical failure modes or uncommon SSD behaviors.

- Performance Impact: Executing many random tests can be resource-intensive, especially with large SSDs or complex workloads. Optimizing test execution performance will be a key consideration.

- Error Detection: Beyond logging errors and tracking actions, the system must detect errors in real time, without significant delay, and process and log them efficiently.

- System Scalability: As SSDs evolve and new models are released, the testing framework must be adaptable. Future enhancements might include the ability to test newer SSD features or integrate with cloud-based SSD testing services.
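For the test coverage concern, one measurable proxy (our choice here, not something this plan specifies) is transition coverage: the fraction of the model's (state, action) pairs that the generated test cases actually exercised. The `transition_coverage` function and the dictionary model format below are hypothetical. Full transition coverage does not prove that every edge case was hit, but a pair that is never exercised points to a concrete gap in the random generation.

```python
from collections import Counter


def transition_coverage(transitions, traces):
    """Measure how many (state, action) pairs of the model the traces exercised.

    transitions: {state: {action: next_state}}  (hypothetical model format)
    traces: list of test cases, each a list of (state, action) pairs.
    Returns (fraction covered, hit counts, set of never-exercised pairs).
    """
    all_pairs = {(s, a) for s, acts in transitions.items() for a in acts}
    # Count only pairs the model defines; anything else is ignored.
    hits = Counter(pair for trace in traces for pair in trace if pair in all_pairs)
    missed = all_pairs - set(hits)
    fraction = (len(all_pairs) - len(missed)) / len(all_pairs) if all_pairs else 1.0
    return fraction, hits, missed


if __name__ == "__main__":
    model = {
        "Empty": {"Write": "Written", "Read": "Empty"},
        "Written": {"Read": "Written", "Flush": "Flushed"},
        "Flushed": {"Read": "Flushed"},
    }
    traces = [[("Empty", "Write"), ("Written", "Read")],
              [("Empty", "Read"), ("Empty", "Write")]]
    frac, hits, missed = transition_coverage(model, traces)
    print(f"coverage: {frac:.0%}, never exercised: {sorted(missed)}")
```

Tracking this number across runs shows whether additional random tests are still reaching new behavior or mostly repeating transitions already covered.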

Next Steps

Our next steps will focus on implementing the core framework, starting with TLA+ integration and command execution. We will then move on to random test generation and error logging features. Once the basic system is functional, we will conduct testing on various SSD models to validate the framework's effectiveness.