Project Overview

Millions of artists have had their artwork scraped from the internet and used to train AI systems without credit or compensation. As AI evolves rapidly, many artists lack the tools or technical knowledge to protect their work from automated scraping systems. This threatens not only individual livelihoods but also the integrity of the creative industry.

ArtGuard is a web-based platform designed to inform, protect, and empower artists by giving them accessible tools, up-to-date information, and a community space dedicated to pushing back against unauthorized AI training. Built to scale beyond the limitations of local workshops and word-of-mouth advocacy, ArtGuard transforms individual efforts into a unified, collaborative movement.

Ultimately, ArtGuard aims to make the digital space safer for artists by building a community that educates them about the current AI landscape.

The Problem

Currently, artists rely on scattered, outdated, or overly technical online sources to understand how AI scraping works. Existing outreach efforts, such as those run by our sponsor Andres Sepulveda Morales, are limited to local events, small workshops, and social media posts. Without scalable infrastructure, artists remain largely unaware of the risks AI poses to their work.

Artists face multiple challenges: fragmented information, no centralized reporting system, limited visibility into which websites are safe, no real-time transparency tools, and very little community support. With no coordinated digital space to learn, discuss, or track unsafe platforms, many artists are left isolated and vulnerable.

In short, artists remain exposed to unauthorized AI training, educational efforts cannot keep pace with technological change, trust in digital art sharing continues to erode, and advocacy cannot scale without technological support.

Our Solution: The ArtGuard Platform

ArtGuard directly addresses these challenges by combining education, reporting, and community into one unified platform. Its goal is to help artists understand risks, report misuse, and collaborate with others on protection strategies. The platform is built around three core pillars:

1. Reporting Websites

Artists can submit reports about platforms they believe have misused their artwork. These reports feed into a dynamic database and visual graph showing submission frequency, evidence counts, and which websites are considered unsafe. This creates the first centralized, community-driven system for identifying risky platforms and tracking misuse patterns.
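As a rough illustration of how submitted reports could roll up into the per-website figures described above, the sketch below groups reports by site and derives a submission count, an evidence total, and an unsafe flag. The `MisuseReport` and `SiteSummary` shapes, the `summarizeReports` function, and the threshold of three reports are all assumptions made for this example, not ArtGuard's actual schema or rules.

```typescript
// Hypothetical shape of one artist-submitted report (not the real ArtGuard schema).
interface MisuseReport {
  site: string;          // domain of the reported platform
  evidenceCount: number; // number of evidence items attached to this report
}

// Aggregated view of one website, as a dashboard row might display it.
interface SiteSummary {
  site: string;
  submissions: number;
  evidenceCount: number;
  unsafe: boolean;
}

// Assumed rule for this sketch: flag a site once it has at least this many reports.
const UNSAFE_REPORT_THRESHOLD = 3;

function summarizeReports(
  reports: MisuseReport[],
  threshold: number = UNSAFE_REPORT_THRESHOLD
): SiteSummary[] {
  const bySite = new Map<string, SiteSummary>();
  for (const report of reports) {
    // Normalize the domain so "Example.test" and " example.test " count as one site.
    const key = report.site.trim().toLowerCase();
    const summary =
      bySite.get(key) ?? { site: key, submissions: 0, evidenceCount: 0, unsafe: false };
    summary.submissions += 1;
    summary.evidenceCount += report.evidenceCount;
    summary.unsafe = summary.submissions >= threshold;
    bySite.set(key, summary);
  }
  // Most-reported sites first.
  return Array.from(bySite.values()).sort((a, b) => b.submissions - a.submissions);
}
```

A real deployment would likely compute these totals in the database rather than in application code, and the rule for marking a site unsafe would need community validation rather than a simple count.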

2. Discussion Forums

A dedicated forum allows artists to talk openly about AI tools, share experiences, validate reports, and collaborate on protection strategies. Instead of acting in isolation, artists gain a community space where they can learn together and support each other in navigating AI-related threats.

3. Educational Articles

ArtGuard includes a curated library of articles, explainers, guides, and updates covering how AI scraping works, which tools exist to protect artwork, and how artists can respond when misuse is detected. This replaces fragmented online sources with a single, trustworthy, and regularly updated educational hub.

Together, these three pillars create a powerful ecosystem: artists learn how AI scraping occurs, report misuse when it happens, and discuss findings and strategies with their peers. With additional features like real-time trust indicators and a planned browser extension, ArtGuard strengthens transparency and awareness across the entire digital art landscape.

Objectives

Primary Goals

- Create an intuitive platform where users can analyze risk and file reports.

- Use those reports to give artists detailed statistics on which websites allow AI training on their work.

- Allow artists to discuss websites, tools, and protection services with their peers.

- Deliver educational tools and analytics for artists.

- Promote education for artists on AI and how it functions.

Technologies

The ArtGuard project demonstrates that it is technologically feasible to create a secure, interactive, and user-friendly platform that empowers artists to protect their work from unauthorized AI use. Through structured, data-driven evaluation of each major design challenge, we selected tools and frameworks that reliably support performance, scalability, and usability goals.

Backend & Storage

- PostgreSQL — primary database, ensuring robust and reliable data storage.

- AWS Services — provides scalable hosting, storage, and backend support.

- Next.js + CMS + Discourse — powering content management, community features, and API integration.

Frontend

- Next.js — rendering the user interface with modern React-based tooling.

- Chart.js — powering visual analytics and reporting dashboards.

- Discourse — integrated community/forum features.

Together, this technology stack creates a cohesive and scalable system capable of supporting all critical ArtGuard functionality.
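To make the role of Chart.js in this stack more concrete, the sketch below shows one way per-site report statistics could be turned into a bar-chart configuration object. The `SiteStats` shape, the `buildReportChartConfig` function, and the dataset labels are assumptions for illustration. The `BarChartConfig` type is a small local stand-in covering only the Chart.js configuration fields used here (`type`, `data.labels`, `data.datasets`, and `options.responsive`), so the example runs without installing the library.

```typescript
// Local stand-in for the subset of a Chart.js bar-chart configuration used in this sketch.
interface BarChartConfig {
  type: "bar";
  data: {
    labels: string[];
    datasets: { label: string; data: number[] }[];
  };
  options: { responsive: boolean };
}

// Hypothetical per-site statistics, e.g. the result of a database query.
interface SiteStats {
  site: string;
  submissions: number;
  evidenceCount: number;
}

// Build a grouped bar chart: one group per site, one bar per metric.
function buildReportChartConfig(stats: SiteStats[]): BarChartConfig {
  return {
    type: "bar",
    data: {
      labels: stats.map((s) => s.site),
      datasets: [
        { label: "Reports submitted", data: stats.map((s) => s.submissions) },
        { label: "Evidence items", data: stats.map((s) => s.evidenceCount) },
      ],
    },
    // Let the chart resize with its container on desktop, tablet, and mobile.
    options: { responsive: true },
  };
}
```

In the browser, an object of this shape could be passed to the Chart.js constructor along with a canvas element, for example `new Chart(canvas, buildReportChartConfig(stats))`, inside a client-side Next.js component.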

Performance Goals

- Image upload and processing completed in under 2 seconds.

- Database queries optimized for high concurrency.

- Responsive UI on desktop, tablet, and mobile.

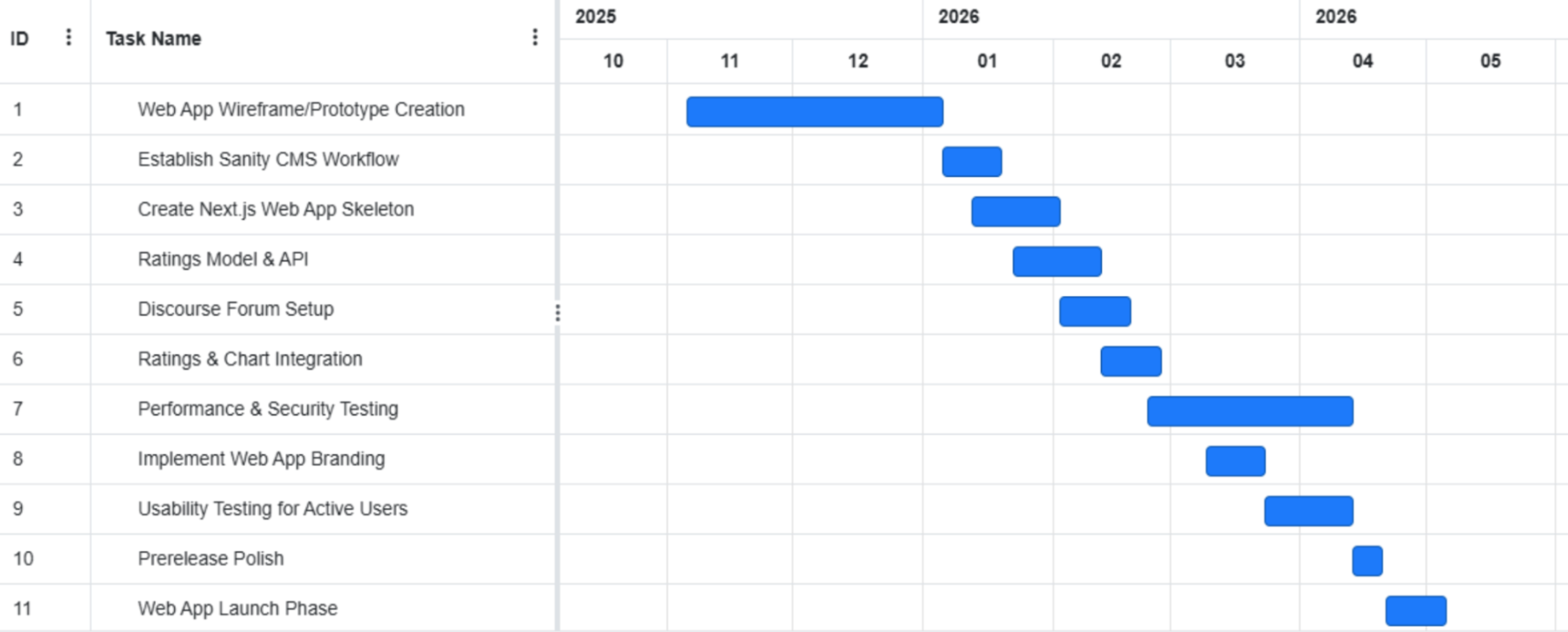

Schedule & Timeline

Below is our current project schedule, showing development milestones and planned deliverables for the semester. As the project evolves, the timeline will be updated accordingly.

We are currently well into development of our project's prototype and are on track to finish it by the end of the semester. As the Gantt chart shows, we have been working on the prototype for several months. Our team and our client, Andres, are satisfied with our current progress and are excited to continue work on this project.

Codebase

The codebase will be published publicly once initial development milestones are met.

Coming soon: the GitHub repository link will appear here.