Project Description

Archeologists have been classifying ancient Native American pottery shards, called sherds, for many years, but they still often disagree. With as many as 50% of archeologists disagreeing on classifications, a more consistent method is needed. Our client, Dr. Leszek Pawlowicz, who studies the group of sherds called Tusayan White Ware, submitted a Capstone proposal to improve the consistency of classification and to ease the process of sherd classification for archeologists.

The goal of team CRAFT is to increase the consistency of classification and to streamline the process of classifying Tusayan White Ware pottery sherds, both in the lab and out in the field. We aim to accomplish this by improving the client's current deep learning image classification model, then integrating it into a mobile application for field classification and a conveyor belt system for use in a lab environment.

Project Requirements

Mobile Application

The mobile application's main functionality revolves around image classification using an image classification model. It should be capable of classifying images from the camera or local storage, even without an internet connection. The app should save the results of these classifications in a database. Additionally, it should record the device's geolocation where the classification is performed and allow users to edit this location if necessary. Users should be able to view classification results with their confidence levels and edit them. For offline scenarios, the app should buffer classified data until an internet connection is available, then upload the results to the database.
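These requirements imply one record per classification that holds the predicted type, its confidence, and an editable location. The sketch below shows one possible shape for that record. It is written in Python purely for illustration (the app itself is planned in Flutter), and the class `ClassificationRecord`, its field names, and the example type labels are hypothetical assumptions rather than the project's actual schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ClassificationRecord:
    """Hypothetical record for one sherd classification (illustrative schema only)."""
    image_path: str
    predicted_type: str
    confidence: float              # model confidence in [0, 1]
    latitude: float
    longitude: float
    location_edited: bool = False  # True once the user corrects the GPS fix
    type_edited: bool = False      # True once the user overrides the model's label
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")

    def edit_location(self, latitude: float, longitude: float) -> None:
        """Let the user correct the location recorded by the device."""
        if not (-90.0 <= latitude <= 90.0 and -180.0 <= longitude <= 180.0):
            raise ValueError("latitude/longitude out of range")
        self.latitude, self.longitude = latitude, longitude
        self.location_edited = True

    def edit_type(self, new_type: str) -> None:
        """Let the user override the predicted type; the flag keeps edits auditable."""
        self.predicted_type = new_type
        self.type_edited = True
```

For example, `ClassificationRecord("sherd_001.jpg", "Sosi", 0.87, 35.19, -111.65)` followed by `edit_location(35.20, -111.60)` would model a user correcting a poor GPS fix. Keeping explicit `*_edited` flags lets later analysis separate model output from human corrections.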

Conveyor Belt Application

The system should be able to classify pottery sherds as they pass along a conveyor belt using an image classification model. It must support bulk processing of multiple sherds, operate in real time to keep up with the conveyor belt's speed, and output all classified results to a data file. Additionally, the system should include image pre-processing capabilities to enhance classification accuracy and consistency.
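The real-time requirement can be made concrete with a simple time budget: if sherds are spaced d centimeters apart on a belt moving at v centimeters per second, a new sherd arrives every d / v seconds, so image capture, pre-processing, and classification together must finish within that interval. The Python sketch below encodes that check; the function names and the spacing, speed, and latency values are hypothetical placeholders, not measurements from the project.

```python
def time_budget_s(spacing_cm: float, belt_speed_cm_s: float) -> float:
    """Seconds available per sherd: the interval between consecutive arrivals."""
    if spacing_cm <= 0 or belt_speed_cm_s <= 0:
        raise ValueError("spacing and belt speed must be positive")
    return spacing_cm / belt_speed_cm_s


def keeps_up(per_sherd_time_s: float, spacing_cm: float, belt_speed_cm_s: float) -> bool:
    """True if capture + pre-processing + inference fit inside the per-sherd budget."""
    return per_sherd_time_s <= time_budget_s(spacing_cm, belt_speed_cm_s)


# Hypothetical example: sherds 10 cm apart on a belt moving at 5 cm/s.
budget = time_budget_s(10.0, 5.0)
print(f"budget per sherd: {budget:.2f} s")
print("keeps up at 0.35 s per sherd:", keeps_up(0.35, 10.0, 5.0))
```

If capture and inference are pipelined across threads, the relevant figure is the average time per sherd (throughput) rather than the end-to-end latency of any single sherd.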

Deep Learning Model

While the client's current deep learning model achieves impressive accuracy, higher accuracy is needed to convince archeologists to use our model instead of conventional human classification. Deep learning models are more consistent than humans, but without a satisfactory level of accuracy, archeologists are unlikely to change their practices.
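Accuracy and consistency can be measured separately. Accuracy compares predictions against reference labels, while consistency compares two sets of classifications of the same sherds (for example, two archeologists, or an archeologist and the model). The sketch below uses plain accuracy and Cohen's kappa, a common chance-corrected agreement statistic. The choice of kappa and the example type labels are our assumptions, not metrics the client has specified.

```python
from collections import Counter
from typing import Sequence


def _check(a: Sequence[str], b: Sequence[str]) -> None:
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")


def accuracy(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """Fraction of sherds whose predicted type matches the reference type."""
    _check(predicted, reference)
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Agreement between two classifiers, corrected for agreement expected by chance."""
    _check(a, b)
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: probability that both independently assign the same type.
    expected = sum(counts_a[t] * counts_b[t] for t in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical type for every sherd
    return (observed - expected) / (1.0 - expected)


model = ["Sosi", "Sosi", "Dogoszhi", "Flagstaff"]
expert = ["Sosi", "Dogoszhi", "Dogoszhi", "Flagstaff"]
print(accuracy(model, expert))
print(round(cohens_kappa(model, expert), 3))
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance, so it separates genuine consistency from agreement that would occur by luck when a few types dominate the collection.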

Solution Vision

Mobile Application

Our solution for the mobile application is an intuitive app that uses our AI model for image classification. Built with Flutter, the app classifies images taken with the camera or loaded from local storage, with or without an internet connection. The app integrates with Firebase to store classification results securely in a database. A key feature is geolocation tagging, which records the location where each classification is made and lets users edit it. Users can also review and adjust classification results, each displayed with its confidence level. To support uninterrupted use, the app includes an offline data buffer that holds classified data until an internet connection is reestablished, at which point it uploads the data to Firebase. Together, the AI model, the Flutter app, and Firebase's cloud infrastructure provide a user-friendly and efficient image classification experience.
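The offline data buffer behaves like a first-in, first-out queue that is drained whenever a connection is available. The following Python sketch illustrates that logic only; it is not Flutter or Firebase code, and the `upload` callable is a hypothetical stand-in for the cloud write, assumed to raise `ConnectionError` while the device is offline.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict

Record = Dict[str, Any]


class OfflineBuffer:
    """FIFO buffer that holds classification records until they can be uploaded."""

    def __init__(self, upload: Callable[[Record], None]) -> None:
        self._upload = upload              # hypothetical cloud write; raises ConnectionError offline
        self._pending: Deque[Record] = deque()

    def add(self, record: Record) -> None:
        """Queue a newly classified record; nothing is sent yet."""
        self._pending.append(record)

    def flush(self) -> int:
        """Upload pending records in order, stopping at the first network failure.

        Returns the number of records uploaded; failed records stay queued.
        """
        sent = 0
        while self._pending:
            try:
                self._upload(self._pending[0])
            except ConnectionError:
                break                      # still offline: keep the rest for the next attempt
            self._pending.popleft()        # remove only after a successful upload
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

Removing a record only after its upload succeeds means a dropped connection never loses data; at worst a record is retried. Firebase's client SDKs (Cloud Firestore and the Realtime Database) also offer built-in offline persistence, which may cover part of this behavior.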

Conveyor Belt Application

Our solution is a Python and OpenCV-based system that uses our image classification model to classify pottery sherds on a conveyor belt. It applies the model in real time to process sherds in bulk and outputs the results to a data file. The system includes image pre-processing to improve accuracy and lets users save bulk classification data locally.
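A bulk run reduces to classifying each sherd image in arrival order and writing one row per sherd to a data file. The Python sketch below uses the standard `csv` module for that output. The `classify` callable stands in for the OpenCV capture, pre-processing, and model inference steps, and the column names are hypothetical, not the project's actual file format.

```python
import csv
import io
from typing import Callable, Iterable, List, Tuple

# Hypothetical interface: takes one pre-processed sherd image, returns (type, confidence).
Classifier = Callable[[object], Tuple[str, float]]
FIELDS = ["sherd_id", "type", "confidence"]


def classify_batch(images: Iterable[object], classify: Classifier) -> List[dict]:
    """Classify every sherd image in arrival order, producing one row per sherd."""
    rows = []
    for sherd_id, image in enumerate(images):
        label, confidence = classify(image)
        rows.append({"sherd_id": sherd_id, "type": label,
                     "confidence": round(confidence, 4)})
    return rows


def results_to_csv(rows: List[dict]) -> str:
    """Serialize classification rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def write_results(rows: List[dict], path: str) -> None:
    """Save bulk results locally as a CSV data file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(results_to_csv(rows))
```

A run might then look like `write_results(classify_batch(frames, model_classify), "belt_run.csv")`, where `frames` and `model_classify` are placeholder names. Keeping serialization separate from file I/O makes the output format easy to test without touching the disk.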

Deep Learning Model

To improve the deep learning model's accuracy and consistency for pottery sherd classification, we'll analyze its current performance and optimize it. We'll evaluate newer architectures such as ConvNeXt and Swin Transformers, and we'll focus on data quality, model architecture, and training methods, incorporating techniques like data augmentation and transfer learning. Regular evaluation and validation will ensure the model meets the desired accuracy levels. We'll then demonstrate the improved performance to archeologists to encourage adoption.
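If sherd images have no meaningful orientation (an assumption worth confirming with the client), flips and 90-degree rotations are label-preserving augmentations. The pure-Python sketch below generates those eight variants for a small grayscale pixel grid to show the idea; it assumes images are lists of pixel rows and is not the client's training code.

```python
from typing import List

Image = List[List[int]]  # assumed format: grayscale pixels as rows of equal length


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return the 4 rotations of the image, each with and without a mirror flip."""
    variants = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate_90(current)
    return variants


sample = [[1, 2],
          [3, 4]]
for variant in augment(sample):
    print(variant)
```

In a Keras pipeline, a similar effect would typically come from preprocessing layers such as `tf.keras.layers.RandomFlip` and `tf.keras.layers.RandomRotation`, which apply random transforms on the fly during training instead of enumerating every variant.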

Technologies Used

CRAFT utilizes the following technologies to deliver cutting-edge solutions:

Flutter

Flutter is a cross-platform mobile development framework for building high-performance, visually attractive, natively compiled applications from a single codebase, which makes it the right choice for our mobile application.

Python

Python is a versatile and powerful programming language widely used in data science, artificial intelligence, web development, and many other applications. This suits our needs for both the conveyor belt application and AI model training.

OpenCV

OpenCV is an open-source computer vision and machine learning library that provides a wide range of tools and algorithms for image processing and has a large supporting community, which makes it the right choice for our conveyor belt application.

TensorFlow

TensorFlow is an open-source machine learning framework widely used for building and deploying AI models. Having inherited our sponsor's codebase, which was written in TensorFlow, we will continue developing with TensorFlow.

Keras

Keras is a high-level neural networks API that runs on top of TensorFlow and allows easy, rapid prototyping of deep learning models. Because it provides a Python interface for developing neural networks and has strong community support, it is a natural choice for this project.

Firebase

Firebase is a comprehensive app development platform that provides a suite of tools and services, including a NoSQL database, which we will use to store classification results from the mobile application for testing and validation.

VSCode

VSCode is a powerful and versatile code editor with a wide range of features and extensions that support the development workflow, from intelligent code completion to integration with version control systems. This will significantly help in developing the project.

GitHub

GitHub is one of the most popular platforms for hosting Git repositories and collaborating as a team. It is a good fit for our project because our team has extensive experience with it, and it lets us work on the project simultaneously while keeping track of changes.

Project Schedule

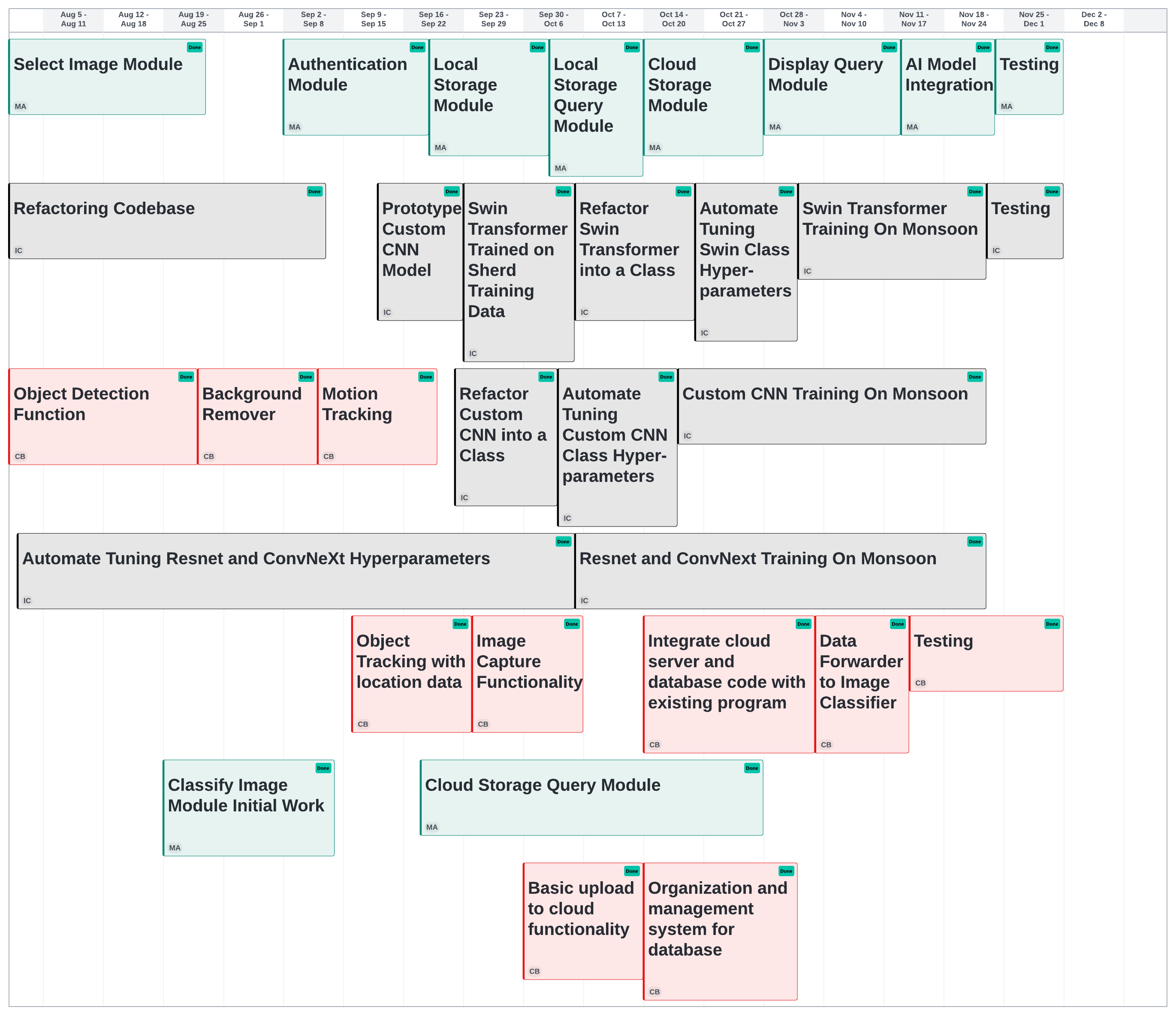

Project Timeline

The Gantt chart above outlines a development plan spanning from August to early December, structured across multiple modules and tasks grouped into phases. The "Image Module" forms the foundation, starting with selection and progressing into development and testing. Concurrently, work on key functionalities like object detection, background removal, and motion tracking begins early, supported by efforts to refactor codebases and integrate machine learning models such as Swin Transformers and custom CNN models. Throughout September and October, emphasis shifts towards cloud storage, local storage queries, and hyperparameter tuning for CNN and ResNet models. Integration efforts ramp up in November, focusing on AI, database management, and cloud functionalities, culminating in testing phases to ensure the system's robustness. The timeline reflects an iterative and modular approach, with overlapping tasks intended to make efficient use of resources and keep steady progress toward a functional and scalable solution.