Our Solution

We envision a robot that can move down a straight path while avoiding any obstacles along it, without any human input. The envisioned fully autonomous robot will require modules for movement, obstacle avoidance, and navigation. For our solution, autonomous movement and obstacle avoidance are the required first steps toward that goal.

Requirements

To gather our requirements, we held weekly meetings with our client, Dr. Leverington, and asked what he envisioned the robot being capable of. We broke each high-level requirement down into more detailed lower-level requirements by asking more specific questions, such as how fast the robot should be able to complete a given feature. Here are some of the high-level requirements that we gathered.

Functional Requirements

The robot will be able to move autonomously

The robot will be able to detect obstacles and avoid them while staying on its path

The robot will be able to recognize the end of the path and stop

Performance Requirements

The robot will be able to complete this path at human walking speed (~1.4 meters per second)

The robot must detect obstacles from one meter to three meters away

The robot must be able to detect obstacles that are at least 0.5 meters tall
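
Taken together, the speed and detection-range figures above bound how long the robot has to react once an obstacle is detected. The sketch below works this out under two simplifying assumptions that are ours, not the client's: the robot travels at a constant speed and the obstacle is stationary. The function name `time_to_reach` is illustrative only.

```python
# Figures taken from the performance requirements above.
WALKING_SPEED_M_S = 1.4   # target pace in meters per second
DETECT_NEAR_M = 1.0       # closest required detection distance
DETECT_FAR_M = 3.0        # farthest required detection distance


def time_to_reach(distance_m: float, speed_m_s: float) -> float:
    """Seconds until the robot reaches a stationary obstacle at constant speed."""
    if speed_m_s <= 0:
        raise ValueError("speed must be positive")
    return distance_m / speed_m_s


if __name__ == "__main__":
    for distance in (DETECT_FAR_M, DETECT_NEAR_M):
        seconds = time_to_reach(distance, WALKING_SPEED_M_S)
        print(f"{distance:.1f} m at {WALKING_SPEED_M_S} m/s: {seconds:.2f} s")
```

At full walking speed this leaves roughly 2.1 seconds to react to an obstacle first seen at three meters, and roughly 0.7 seconds for one first seen at one meter.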

Environmental Requirements

The Raspberry Pi must be used as the main controller and computer for the robot

The hardware that allows the robot to function must be contained within or on the thirty-gallon barrel

Any sensors or parts needed for this project must stay within the total $300 budget limit

Technologies

To complete these two modules, we had to find solutions to our technological challenges.

Control Unit: Raspberry Pi 4B

We will be using a Raspberry Pi as the central computer for the robot. It will be responsible for running the obstacle avoidance algorithm and for sending the signals to the motors that make the robot move. Future modules can be implemented on the Raspberry Pi as well.

Obstacle Avoidance: Xbox 360 Kinect

For our obstacle avoidance module, we needed a sensor that provides enough data at an affordable price point. The Xbox 360 Kinect combines a camera with an infrared sensor that captures depth data. Using this depth data, we can find the distance to objects in front of the sensor. When the robot finds an object that is too close to the sensor, it slows down and reroutes itself around the object.
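
The "too close" check can be sketched as follows. This is a minimal illustration and not the project's actual code: it assumes the depth frame has already been read from the Kinect as rows of distances in millimeters, with 0 meaning "no reading", and the threshold and function names are hypothetical. A real implementation would most likely operate on an array type rather than nested lists.

```python
from typing import List, Optional

# Assumed values for illustration; not taken from the project code.
SLOW_DOWN_MM = 3000   # begin reacting at three meters
MIN_VALID_MM = 1      # a reading of 0 is treated as "no data"


def nearest_obstacle_mm(depth_frame: List[List[int]]) -> Optional[int]:
    """Return the smallest valid depth in the frame, or None if there is none."""
    valid = [d for row in depth_frame for d in row if d >= MIN_VALID_MM]
    return min(valid) if valid else None


def obstacle_too_close(depth_frame: List[List[int]],
                       threshold_mm: int = SLOW_DOWN_MM) -> bool:
    """True when the nearest valid reading is at or inside the threshold."""
    nearest = nearest_obstacle_mm(depth_frame)
    return nearest is not None and nearest <= threshold_mm


if __name__ == "__main__":
    frame = [[0, 4000, 3500],
             [2500, 0, 5000]]
    print(nearest_obstacle_mm(frame), obstacle_too_close(frame))
```

Ignoring zero readings matters here: without that filter, every frame containing a missing pixel would look like an obstacle at zero distance.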

Hallway Detection: Raspberry Pi Camera

Each end of the hallway is detected using a Raspberry Pi Camera module. This small camera faces forward on the robot. Its images are passed to our computer vision module to determine where the robot is in the hallway.

Computer Vision: OpenCV/Keras/TensorFlow

To detect each end of the hall, we combine the Raspberry Pi Camera's images with computer vision, using the OpenCV library. We also trained a deep convolutional neural network to learn what each end of the hallway looks like. Images from the camera are given to this deep learning model, which predicts which end of the hallway the robot is at. The model is built with the Keras and TensorFlow libraries.
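
To illustrate how a prediction might be turned into a decision, the sketch below maps a model's per-class scores to a hallway-end label. It assumes the model outputs one probability per class; the class names, their order, and the confidence threshold are all hypothetical and may differ from the trained model.

```python
from typing import Optional, Sequence

# Hypothetical class labels; the real model's classes may differ.
LABELS = ("first_end", "second_end", "mid_hallway")


def decide_hall_end(probabilities: Sequence[float],
                    min_confidence: float = 0.8) -> Optional[str]:
    """Map per-class scores to a hallway-end label, or None if not at an end.

    Returns None when the best score is below min_confidence or when the
    most likely class is the (assumed) "mid_hallway" class.
    """
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per label")
    best = max(range(len(LABELS)), key=lambda i: probabilities[i])
    if probabilities[best] < min_confidence or LABELS[best] == "mid_hallway":
        return None
    return LABELS[best]


if __name__ == "__main__":
    print(decide_hall_end([0.9, 0.05, 0.05]))
    print(decide_hall_end([0.5, 0.4, 0.1]))
```

Requiring a minimum confidence keeps the robot from turning around or stopping on an ambiguous image; the value 0.8 is only a placeholder.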

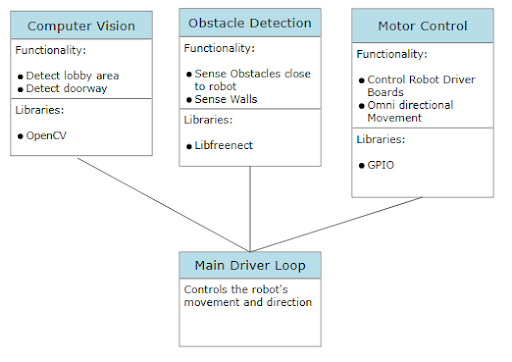

Robot Architecture

The diagram above shows the robot's architecture: the main modules, or components, that we use to complete this project. The computer vision module takes images from the Raspberry Pi Camera and uses them to predict which end of the hall the robot is at. The obstacle detection module uses the Kinect sensor and its depth image to determine how close obstacles are to the robot. Lastly, the motor control module handles the hardware and software connection between the Raspberry Pi and the motor drivers. These components are wired together, and through software we can send signals to the motor drivers to control the robot's movement in any direction and at any speed. All three modules are used together to create the main program loop.
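
As one illustration of controlling direction and speed in software, the sketch below converts a forward speed and a turn rate into a pair of motor commands. It assumes a two-motor differential drive with commands normalized to the range -1 to 1; the source does not describe the drive layout, so the layout and the function names are assumptions, and the step that writes these values to the motor drivers is left out.

```python
from typing import Tuple


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Limit value to the range [low, high]."""
    return max(low, min(high, value))


def mix_drive(speed: float, turn: float) -> Tuple[float, float]:
    """Convert speed and turn (each -1..1) into (left, right) motor commands.

    A positive turn steers right by driving the left motor faster
    than the right one.
    """
    left = clamp(speed + turn)
    right = clamp(speed - turn)
    return left, right


if __name__ == "__main__":
    print(mix_drive(0.5, 0.0))   # straight ahead at half speed
    print(mix_drive(0.0, 0.5))   # turn in place to the right
```

Clamping keeps a combined speed and turn request from exceeding what a motor driver could accept.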

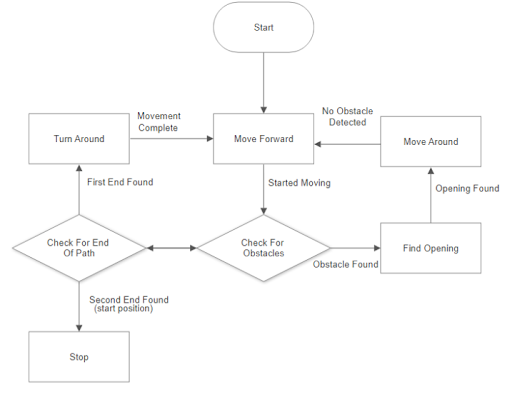

The Program Implementation Loop

This image shows the master loop that the program runs to avoid obstacles and reach each end of the hall. We start by moving the robot forward and looking for any obstacles in the way. If we encounter an obstacle, we take the necessary steps to navigate around it, then continue moving forward and looking for the next obstacle. If there is no obstacle in the way, we check whether we have reached either end of the hall. If we find the first end of the hall, we turn the robot around and continue in the opposite direction. If we find the second end of the hall, or have returned to the original starting position, we stop the robot and end the program.
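
The loop described above can be sketched in code as follows. This is a simplified illustration, not the project's implementation: the six callables stand in for the obstacle detection, computer vision, and motor control modules, and their names, the `max_steps` safety bound, and the rule of counting an end only when its label changes are all assumptions made for the example.

```python
from typing import Callable, Optional


def run_hallway_loop(
    obstacle_ahead: Callable[[], bool],
    hall_end_seen: Callable[[], Optional[str]],
    move_forward: Callable[[], None],
    avoid_obstacle: Callable[[], None],
    turn_around: Callable[[], None],
    stop: Callable[[], None],
    max_steps: int = 10_000,
) -> int:
    """Run until the second hall end is reached; return the ends visited."""
    ends_visited = 0
    last_end: Optional[str] = None
    for _ in range(max_steps):        # safety bound added for this sketch
        move_forward()
        if obstacle_ahead():
            avoid_obstacle()
            continue                  # resume forward, look for the next obstacle
        end = hall_end_seen()
        if end is not None and end != last_end:
            last_end = end
            ends_visited += 1
            if ends_visited == 1:
                turn_around()         # first end: head back the other way
            else:
                break                 # second end or starting position: finish
    stop()
    return ends_visited
```

Passing the modules in as callables keeps the loop independent of the hardware, so it can be exercised with scripted stand-ins before being run on the robot.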

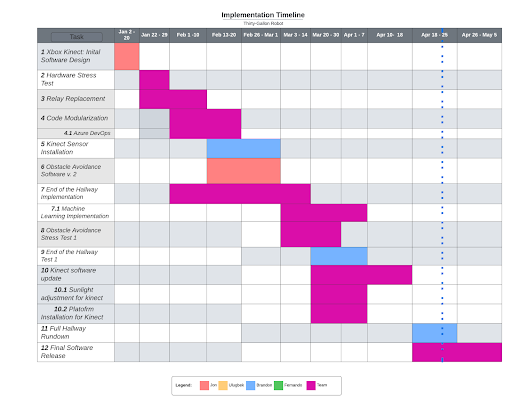

Schedule

This Gantt chart shows our project schedule. At the start of the spring semester, one of the relays that carried power from the batteries to the motors broke, and an electrical engineering (EE) student had to repair it. While this was happening, we modularized our code base so that the next capstone teams can call our obstacle avoidance module instead of building their modules directly into ours. We also had to implement the end-of-hall detection. We then ran into another issue: the Kinect sensor reported many false positives when looking down the hallway in the Engineering building, likely because of direct sunlight and reflections within the hall. We took this into account in our obstacle avoidance system, and we were able to finish our software and release it to our client.